A Is For Another: A Dictionary Of AI

Statistics and Machine Learning

By Andrea Kelemen

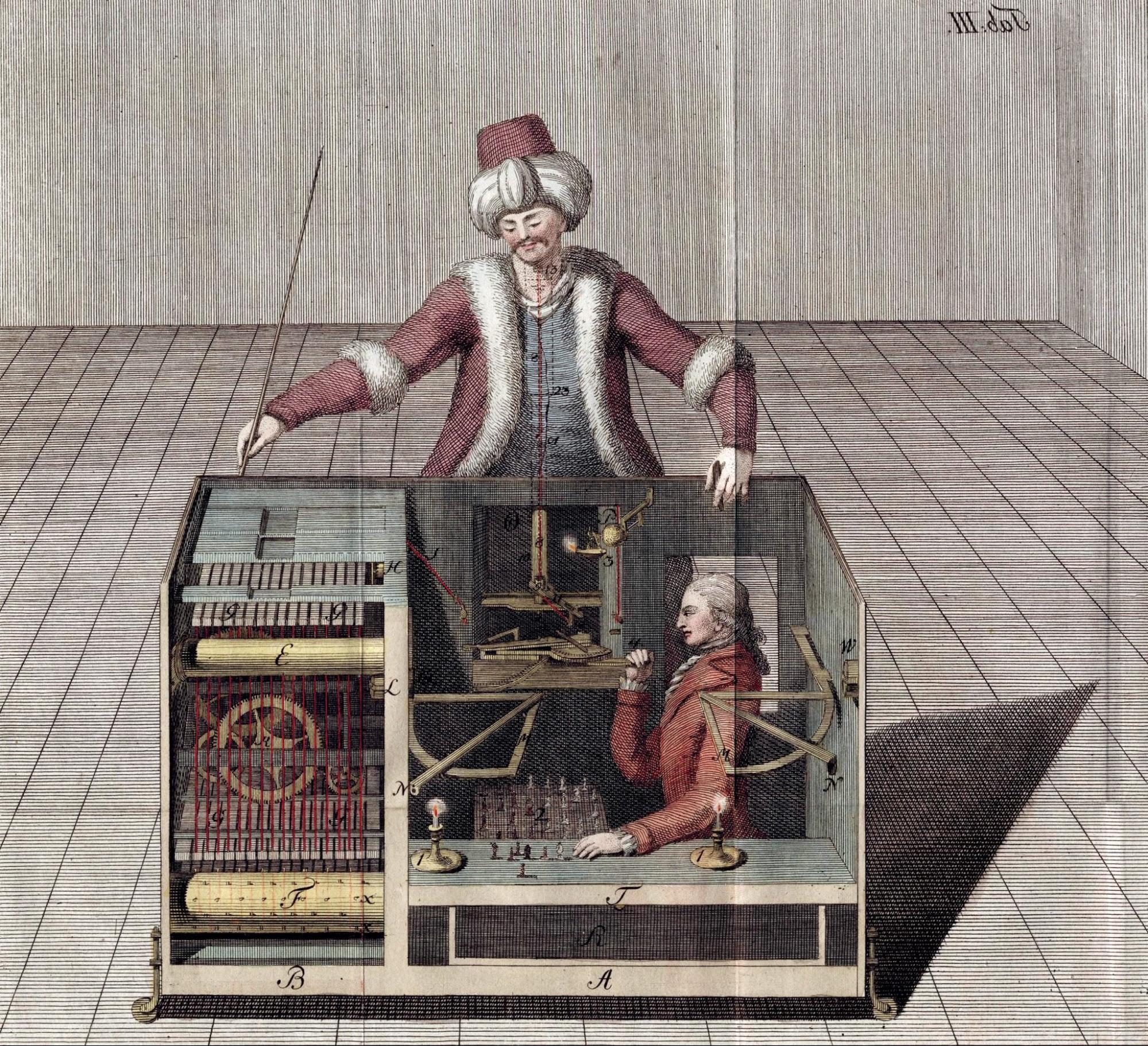

In the 18th century, rule-based systems were becoming prevalent. Notable at the time was Pierre-Simon Laplace, who pioneered ‘scientific determinism’: he claimed that if an intellect could know all the forces that set nature in motion, and were intelligent enough to analyse this data, “for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.” Laplace is also credited with developing Bayesian probability theory, which remains important in data science and modeling. This is one story about how the field of statistics was born.

In statistics, the probability of an event is inferred based on prior knowledge of conditions that might be related to the event. In other words, statistical models make use of rules to correlate seemingly disparate details of dynamic life and introduce perceived certainty to otherwise dynamic and contingent systems. Both statistical inference and logical operations are essential tools of machine learning systems operating in highly contingent environments. As Ramon Amaro explains, machine learning uses statistical models to reduce complex environments by simplifying data into more manageable variables that are easier to calculate and thus require less computational power.
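To make the idea of inferring a probability from prior knowledge concrete, here is a minimal sketch of Bayes' rule in Python. The function name `posterior`, its parameter names, and the numbers used are illustrative assumptions, not taken from the essay; the sketch only shows how a prior belief is revised once a related condition is observed.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(event | evidence) using Bayes' rule.

    prior               -- P(event), the belief held before seeing evidence
    likelihood          -- P(evidence | event)
    false_positive_rate -- P(evidence | no event)
    All names and values here are hypothetical, chosen for illustration.
    """
    # Total probability of observing the evidence at all.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# A rare event (1% prior) with fairly reliable evidence.
print(posterior(prior=0.01, likelihood=0.9, false_positive_rate=0.05))
```

With these assumed numbers the posterior comes out at roughly 0.15: even fairly reliable evidence leaves the event unlikely when the prior is low, which is one way the simplifying assumptions built into a model shape what it reports as certain.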

Machine learning is built upon a statistical framework. However, on a more basic level, statistical inference rests on logical assumptions that are questionable. This is the problem of induction: machine learning systems generalise from a limited number of observations of particular instances, and presuppose that sequences of events in the future will occur as they always have in the past. For this reason, the philosopher C. D. Broad writes: "induction is the glory of science and the scandal of philosophy." For David Hume, scientific knowledge is based on the probability of an observable outcome: the more instances, the more probable the predicted conclusions. Thus the number of instances perceived is taken to correlate with the number of times these instances will recur, and this correlation serves to establish truth.
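The claim that more instances make a conclusion more probable can be sketched with Laplace's rule of succession, which estimates the chance that an event recurs after it has been observed in s of n trials as (s + 1) / (n + 2). This formula is brought in here purely as an illustration; it assumes a uniform prior over the unknown probability, and the function name `rule_of_succession` is hypothetical.

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's estimate that the next trial succeeds: (s + 1) / (n + 2).

    Assumes a uniform prior over the unknown success probability,
    an assumption made for this sketch and not stated in the essay.
    """
    return Fraction(successes + 1, trials + 2)


# An event that has occurred on every one of n occasions so far.
for n in (1, 10, 100, 1000):
    print(n, float(rule_of_succession(n, n)))
```

The estimate climbs towards 1 as confirming instances accumulate but never reaches it, and it says nothing about whether the conditions that produced the past observations will continue to hold.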

With AI and big data, this means we are inscribing our past, often outdated, models into the future by assuming that categories stay rigid and that events occur in the same manner over time. With the widespread use of logical operations in a growing number of fields that still exist but were never previously automated, we end up running into a whole array of problems. These include mistakes involving uncertainty or randomness, biased data sampling, bad choice of measures, mistakes involving statistical power, and a crisis of replicability.